The AI agent developer platform is now in beta

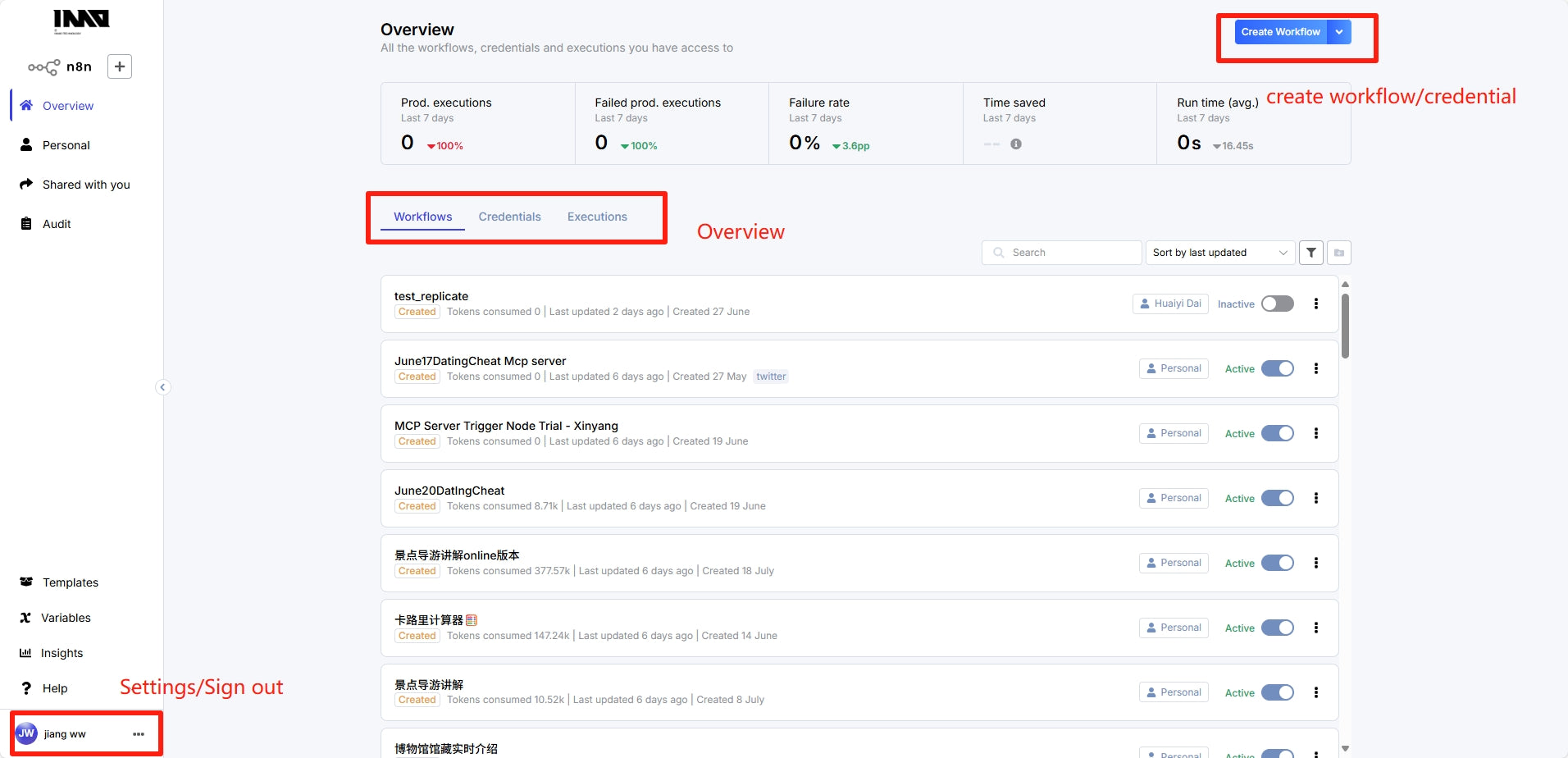

INMO Multimodal Agent Developer Platform is a low-code orchestration and one-click publishing platform built to take AI glasses apps from development to on-device deployment.

Built as a deep customization of n8n, it lets developers compose visual workflows that combine triggers (Chat/Webhook/APP Event), conversation memory, knowledge retrieval, mainstream models (OpenAI/Gemini/Claude/DeepSeek/Llama, etc.), and tools (HTTP, code execution, databases, the MCP ecosystem) to produce text, images, audio, and video.

The platform natively bridges to the glasses Super App: it captures images, voice, and text from INMO Air3, runs Agent decisions with tools (e.g., vision, retrieval, TTS), then returns structured results and media to the glasses, while controlling screen regions, volume, and progress bars.
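The capture, decide, and return loop described above can be pictured with a small TypeScript sketch. Everything in it is an assumption made for illustration: the `GlassesEvent` and `GlassesResponse` shapes, the `tools` object, and the `handleEvent` function are hypothetical and do not come from the platform's actual API, which is configured visually rather than in code.

```typescript
// Hypothetical shapes: none of these names come from the INMO platform itself.
type GlassesEvent =
  | { kind: "image"; data: string } // base64 image captured on the glasses
  | { kind: "voice"; transcript: string } // speech already transcribed by ASR
  | { kind: "text"; text: string };

interface GlassesResponse {
  text: string;
  display: {
    region: "top" | "center" | "bottom"; // assumed screen regions
    volume: number; // assumed 0-100 scale
    progress: number; // assumed 0-1 fraction for the progress bar
  };
}

// Stand-in tools; a real workflow would call vision or retrieval services here.
const tools = {
  vision: (data: string): string => `described image (${data.length} base64 chars)`,
  retrieval: (query: string): string => `top document for "${query}"`,
};

// Keep a value inside [min, max].
function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

// Choose a tool from the event type (the "Agent decision" in this toy version).
function runTool(event: GlassesEvent): string {
  if (event.kind === "image") return tools.vision(event.data);
  if (event.kind === "voice") return tools.retrieval(event.transcript);
  return tools.retrieval(event.text);
}

// Wrap the tool output in a structured result with display controls.
function handleEvent(event: GlassesEvent, volume: number = 50): GlassesResponse {
  return {
    text: runTool(event),
    display: { region: "center", volume: clamp(volume, 0, 100), progress: 1 },
  };
}
```

In this sketch, a call such as `handleEvent({ kind: "voice", transcript: "nearest cafe" })` routes the transcript to the retrieval stand-in and returns a result addressed to the center of the display. The real platform presumably lets a model choose among tools; the fixed mapping here only keeps the example short.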

It provides centralized credentials and access control, end-to-end logs and debugging, granular credits/token metering, and cloud or self-hosted deployment.

Target users are developers and integration teams who want to build AI apps on the platform and deploy rapidly to INMO glasses (currently supported: INMO Air3).

Benefits

- Build → ship on-glass, fast: Visual assembly with starter templates, one-click publish/hot updates to the INMO Air3 Super App; control screen, volume, and progress bar on device.

- Plug-and-play multimodality: INMO App Event Trigger/Chat Trigger connect directly to Air3 data streams; common chains like vision, ASR, TTS, and retrieval work out of the box.

- Team-ready, governed access: Admins configure mainstream model API keys once for platform-wide reuse, while personal/social accounts remain isolated.

- Observable and cost-transparent: End-to-end execution logs and error tracing, automated + manual reviews.
